Executive Summary

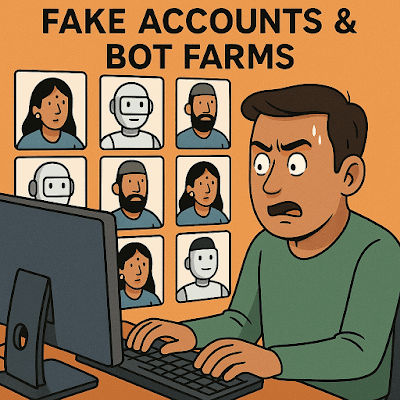

In today’s hyper-connected world, social media platforms have become battlegrounds for hearts, minds, and public opinion. Malicious actors deploy fake accounts (human-run profiles masquerading as genuine users) and bot farms (automated scripts posting at scale) to push narratives, sow discord, and amplify misinformation. This report examines how these operations function, highlights real-world case studies, analyzes their societal impact, and offers practical steps to detect and mitigate their influence.

- 1. Introduction

- 2. Anatomy of a Fake-Account Operation

- 3. Narrative-Building Tactics

- 4. Case Studies

  - 4.1 Pakistan-Linked Hindu-Profile Campaigns

  - 4.2 Bangladesh-Origin Hate Mills

  - 4.3 China’s “50-Cent” Bot Networks

- 5. Societal & Democratic Impact

- 6. Detection & Verification

- 7. Mitigation Strategies

- 8. Recommendations for The News Drill

- 9. Conclusion

1. Introduction

- Objective: Unpack the tactics behind fake accounts and bot farms, show how they skew online discourse, and equip readers with tools to recognize and resist manipulation.

- Scope: Focus on South Asian contexts (India, Pakistan, Bangladesh), with nods to global examples such as China’s influence operations.

2. Anatomy of a Fake-Account Operation

2.1 Fake Human-Run Accounts

- Creation: Actors register dozens or hundreds of profiles using stolen or AI-generated photos and plausible names (e.g., “Rohit Sharma,” “Priya Patel”).

- Behaviour: These accounts like, share, and comment on target posts, often at strategic moments (e.g., right after a breaking news event), to steer sentiment.

2.2 Automated Bot Farms

- Infrastructure: Thousands of lightweight scripts running on rented servers or hijacked IoT devices.

- Capabilities:

  - Mass Posting: Flood hashtags and comment sections with identical or slightly varied messages.

  - Simultaneous Engagement: Like or retweet posts within seconds of each other, boosting their visibility in ranking algorithms.

  - Network Effects: Bots follow and amplify each other, creating the illusion of broad support.
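The simultaneous-engagement and network-effect patterns above leave a measurable trace: the same accounts keep reacting to the same posts within seconds of each other. The Python sketch below is one illustrative way to surface such pairs, assuming engagement data is available as hypothetical `(account_id, post_id, unix_timestamp)` tuples. The function name `co_engagement_pairs`, the 10-second window, and the 3-post threshold are illustrative choices, not values taken from any platform or published detector.

```python
from collections import defaultdict


def co_engagement_pairs(events, window_s=10, min_shared=3):
    """Find account pairs that repeatedly engage with the same posts almost simultaneously.

    events: iterable of (account_id, post_id, unix_timestamp) tuples (assumed format).
    Returns {(account_a, account_b): n_posts} for pairs that engaged with the
    same post within `window_s` seconds of each other on at least
    `min_shared` distinct posts. Thresholds are illustrative, not calibrated.
    """
    by_post = defaultdict(list)
    for account, post, ts in events:
        by_post[post].append((ts, account))

    shared = defaultdict(set)  # (account_a, account_b) -> set of post_ids
    for post, hits in by_post.items():
        hits.sort()  # order each post's engagements by time
        for i, (t1, a1) in enumerate(hits):
            for t2, a2 in hits[i + 1:]:
                if t2 - t1 > window_s:
                    break  # hits are time-sorted, so later ones are even further away
                if a1 != a2:
                    shared[tuple(sorted((a1, a2)))].add(post)

    return {pair: len(posts) for pair, posts in shared.items()
            if len(posts) >= min_shared}


# Illustrative data only.
events = [
    ("bot_a", "p1", 100), ("bot_b", "p1", 103),
    ("bot_a", "p2", 500), ("bot_b", "p2", 502),
    ("bot_a", "p3", 900), ("bot_b", "p3", 901),
    ("user_x", "p1", 4000),
]
print(co_engagement_pairs(events))  # {('bot_a', 'bot_b'): 3}
```

A flagged pair is a lead to investigate, not proof: genuine readers of a breaking story may also react quickly, so pairs that recur across many unrelated posts are more telling than a single burst.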


3. Narrative-Building Tactics

| Tactic | Description |
|---|---|
| Hashtag Hijacking | Infiltrating trending hashtags (e.g., #DelhiRiots) with propaganda or coordinated messaging to redirect public discourse. |
| Persona Mimicry | Using fake profiles with local names and identities (e.g., Hindu profiles operated by foreign users) to manipulate communal or nationalistic sentiments. |
| Astroturfing | Creating the illusion of grassroots support by coordinating posts from fake accounts or bots. |
| Echo Chambers | Building isolated networks where only manipulated or biased content circulates, reinforcing belief systems without exposure to dissenting facts. |
| Media Leaks | Circulating fabricated documents, screenshots, or audio clips to give the appearance of whistleblower-style exposure, often to discredit or slander opponents. |
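A rough first check for hashtag hijacking and astroturfing is how concentrated a hashtag’s volume is: organic trends tend to draw posts from many accounts, while coordinated pushes often rely on a small core posting repeatedly. The Python sketch below assumes posts from one time window are available as hypothetical `(account_id, hashtag)` pairs; `top_account_share` and its `top_n` cut-off are illustrative names, not part of any hashtag-tracking tool’s API.

```python
from collections import Counter


def top_account_share(posts, top_n=10):
    """For each hashtag, the share of its posts made by its `top_n` most active accounts.

    posts: iterable of (account_id, hashtag) pairs from one time window (assumed format).
    Hashtags are lowercased so "#Trend" and "#trend" are counted together.
    """
    per_tag = {}
    for account, tag in posts:
        per_tag.setdefault(tag.lower(), Counter())[account] += 1

    shares = {}
    for tag, counts in per_tag.items():
        total = sum(counts.values())
        top = sum(n for _, n in counts.most_common(top_n))
        shares[tag] = top / total
    return shares


# Illustrative data only: two accounts produce 80 of 100 posts.
posts = [("bot1", "#Trend")] * 40 + [("bot2", "#Trend")] * 40
posts += [(f"user{i}", "#Trend") for i in range(20)]
print(top_account_share(posts, top_n=2))  # {'#trend': 0.8}
```

A high share is not conclusive on its own, since a busy news desk or fan account can also dominate a small hashtag; it is most useful alongside the account-level signals in Section 6.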

4. Case Studies

4.1 Pakistan-Linked Hindu-Profile Campaigns

- Modus Operandi: Profiles registered from Pakistani IP ranges under Hindu names posted anti-government or anti-minority messages to erode trust within India.

- Impact: Sparked communal tensions and forced local news outlets to issue clarifications.

4.2 Bangladesh-Origin Hate Mills

- Modus Operandi: Bangladeshi operators impersonate Indian users to spread false stories of minority-on-majority violence, stoking polarization.

- Impact: Several viral WhatsApp forwards were later debunked, but not before triggering village-level unrest.

4.3 China’s “50-Cent” Bot Networks

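- Modus Operandi: State-linked accounts amplify pro-China narratives on topics such as COVID-19, border tensions, and Kashmir. The “50-Cent” label originally referred to human commentators reportedly paid per post, so these networks can mix paid human accounts with automated bots.

- Impact: Elevated government talking points into global trending topics and diluted critical coverage.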

5. Societal & Democratic Impact

- Erosion of Trust: Citizens grow skeptical of legitimate news, damaging media credibility.

- Polarization: Manufactured outrage deepens social divides.

- Civic Confusion: Misinformation undermines informed decision-making (e.g., during elections or public health crises).

- Legal and Ethical Quagmires: Platforms struggle to balance free speech against the removal of malicious content.

6. Detection & Verification

The following signals can help identify suspicious activity by bots or fake accounts:

| Signal | What to Watch For |
|---|---|
| Account Age | Recently created profiles with minimal history, followers, or interactions. |
| Activity Patterns | Round-the-clock (24/7) posting at regular, unnatural intervals, a common sign of automation. |
| Content Uniformity | Identical comments or posts repeated across many unrelated threads or topics. |
| Follower Networks | Bots tend to follow and retweet each other in closed loops or clusters. |
| Language Inconsistencies | Mismatched regional slang, poor translation, or an unnatural tone suggesting automation or foreign control. |
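Several of the signals in the table can be approximated with simple statistics. The Python sketch below assumes post timestamps (in Unix seconds) and raw post text have already been collected, and computes three rough indicators: how clockwork-regular the gaps between posts are, how many hours of the day an account is active, and what share of posts are exact duplicates after normalising case and whitespace. The function names are hypothetical, and any cut-off for calling a value suspicious would need to be calibrated on real data.

```python
import statistics
from collections import Counter
from datetime import datetime, timezone


def interval_regularity(timestamps):
    """Coefficient of variation (stdev / mean) of the gaps between posts.

    Values near 0 mean clockwork-like posting; human activity is usually
    burstier. Returns None when there are too few gaps to judge.
    """
    ts = sorted(timestamps)
    gaps = [later - earlier for earlier, later in zip(ts, ts[1:])]
    if len(gaps) < 2 or statistics.mean(gaps) == 0:
        return None
    return statistics.pstdev(gaps) / statistics.mean(gaps)


def hour_coverage(timestamps):
    """Fraction of the 24 UTC hours in which at least one post was made."""
    hours = {datetime.fromtimestamp(t, tz=timezone.utc).hour for t in timestamps}
    return len(hours) / 24


def duplicate_share(posts):
    """Share of posts whose normalised text appears more than once in the sample."""
    if not posts:
        return 0.0
    # Crude normalisation (assumption): lowercase and collapse whitespace.
    normalised = [" ".join(p.lower().split()) for p in posts]
    counts = Counter(normalised)
    return sum(1 for text in normalised if counts[text] > 1) / len(normalised)


# Illustrative data only.
clockwork = [i * 600 for i in range(20)]  # one post every 10 minutes
print(interval_regularity(clockwork))  # 0.0 (perfectly regular gaps)
print(hour_coverage(clockwork))
print(duplicate_share(["Vote X now!", "vote x   now!", "Lovely weather today"]))
```

Exact matching misses the “slightly varied” messages that bot farms also use; fuzzier techniques such as word shingling with Jaccard similarity would be needed for those. No single score should be read as a verdict.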

Recommended Tools:

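- Botometer: Analyze Twitter (now X) accounts for bot-like behavior.

- Reverse Image Search: Check whether a profile photo appears elsewhere online (often a sign of a fake identity). AI-generated faces usually return no matches, so a clean result does not prove a profile is genuine.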

- Hashtag Trackers: Use tools like Talkwalker or Brandwatch to see who is driving a trend and whether it’s organic.

- Network Analysis Tools: NodeXL or Gephi can help map and expose clusters of bots or fake networks.
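NodeXL and Gephi visualise follower graphs, but the underlying idea of spotting closed loops can be sketched in a few lines. The Python example below assumes follow relationships are available as hypothetical `(follower, followed)` pairs; it keeps only mutual follows, groups accounts into connected components, and flags components that are both large and densely interlinked. The `min_size` and `min_density` defaults are illustrative assumptions, and this is a simplified stand-in for the community-detection algorithms those tools provide.

```python
from collections import defaultdict, deque


def mutual_follow_clusters(follows, min_size=3, min_density=0.8):
    """Flag tightly interlinked groups in a follow graph.

    follows: iterable of (follower, followed) pairs (assumed format).
    Returns a list of (sorted_members, density) for connected components of the
    mutual-follow graph with at least `min_size` accounts and a share of
    possible mutual links of at least `min_density`.
    """
    follow_set = set(follows)

    # Keep only reciprocal edges: a follows b AND b follows a.
    adj = defaultdict(set)
    for a, b in follow_set:
        if a != b and (b, a) in follow_set:
            adj[a].add(b)
            adj[b].add(a)

    seen, clusters = set(), []
    for start in list(adj):
        if start in seen:
            continue
        # Breadth-first search collects one connected component.
        component, queue = {start}, deque([start])
        seen.add(start)
        while queue:
            node = queue.popleft()
            for neighbour in adj[node]:
                if neighbour not in seen:
                    seen.add(neighbour)
                    component.add(neighbour)
                    queue.append(neighbour)

        n = len(component)  # always >= 2, since every node here has a mutual link
        links = sum(len(adj[v]) for v in component) // 2
        density = links / (n * (n - 1) / 2)
        if n >= min_size and density >= min_density:
            clusters.append((sorted(component), round(density, 2)))
    return clusters


# Illustrative data only: four accounts that all follow one another,
# one ordinary mutual pair, and a one-way follow.
ring = ["b1", "b2", "b3", "b4"]
follows = [(a, b) for a in ring for b in ring if a != b]
follows += [("alice", "bob"), ("bob", "alice"), ("carol", "b1")]
print(mutual_follow_clusters(follows))  # [(['b1', 'b2', 'b3', 'b4'], 1.0)]
```

Friends, families, and small communities also follow one another, so a dense cluster is a reason to look closer at account age, posting patterns, and content overlap, not a finding in itself.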

7. Mitigation Strategies

- Platform Policies: Advocate for stricter identity verification (e.g., phone number or government ID).

- Digital Literacy: Educate users on spotting bots and false personas.

- Rapid-Response Fact-Checks: Publish real-time debunks of viral claims.

- Algorithmic Safeguards: Encourage platforms to throttle coordinated behaviour.

- Legal Action: Support Right to Information (RTI) requests and investigative journalism to expose operators.

8. Recommendations for The News Drill

- Regular “Bot Watch” Column: Document emerging manipulation tactics.

- Interactive Explainers: Visual guides on recognizing fake accounts.

- Crowdsourced Reporting: Invite readers to flag suspicious profiles using a simple form.

- Partnerships: Collaborate with fact-checkers (Alt News, BOOM) and digital-rights NGOs.

9. Conclusion

Fake accounts and bot farms represent a covert weapon in today’s information wars. By understanding their tactics, spotlighting real-world examples, and empowering readers with verification tools, The News Drill can lead the charge in restoring integrity to online discourse.

“In the battle for truth, awareness is the first line of defense.”