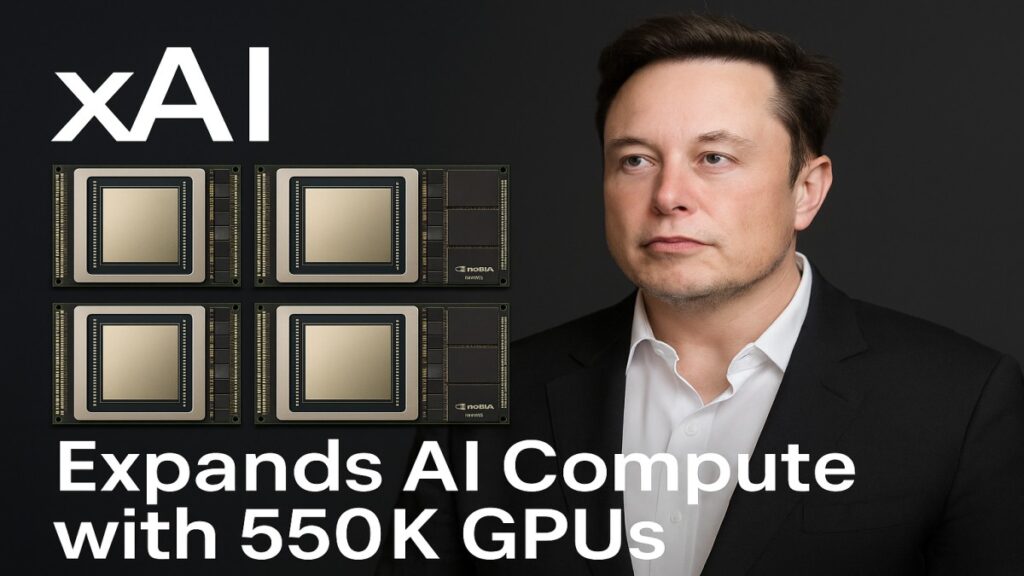

New York – In a move that could reshape the global AI landscape, xAI is expanding its AI compute infrastructure at an unprecedented scale. Founded by Elon Musk, xAI is quickly becoming one of the most formidable players in the artificial intelligence race. The company currently operates its Colossus 1 supercluster and has now unveiled plans to deploy 550,000 additional Nvidia GPUs for Colossus 2. The investment is part of a broader ambition to scale to 50 million H100-equivalent compute units within the next five years.

This development not only highlights Musk’s growing dominance in AI but also signals a major shift in compute power availability, setting the stage for the evolution of cutting-edge AI models like Grok 4 and the experimental Baby Grok.

xAI Expands AI Compute

The phrase “xAI expands AI compute” is becoming a central talking point across the global tech sector. Compute power is the fuel of modern AI. As larger models demand more data and more training parameters, organizations must scale their hardware infrastructure to remain competitive. xAI’s approach is clear: build the largest, most powerful training environment possible.

Elon Musk’s AI startup currently operates Colossus 1, a supercomputing cluster housing hundreds of thousands of Nvidia H100 GPUs, which powers its proprietary Grok chatbot model. But that’s just the beginning. The upcoming Colossus 2 is expected to dwarf its predecessor with the planned deployment of 550,000 new Nvidia GPUs.

This scale of compute deployment is virtually unmatched. When complete, it will place xAI at the forefront of AI infrastructure, competing directly with the likes of OpenAI, Google DeepMind, Anthropic, and Meta.

Inside the Colossus Architecture

Colossus 1: The Foundation

Colossus 1 serves as the current backbone of xAI’s model training and inference capabilities. Designed specifically to support large-scale language models, it consists of:

- Hundreds of thousands of Nvidia H100 and A100 GPUs

- Advanced cooling and energy-efficient server farms

- Tight integration with X (formerly Twitter) for real-time data ingestion

Colossus 1 enables xAI to rapidly train and fine-tune models like Grok 3 and Grok 4 using massive datasets curated from social media and public internet repositories.

Colossus 2: The Next Frontier

With xAI’s announcement of 550,000 new GPUs, Colossus 2 will likely become the largest AI-focused GPU cluster in the world. Features expected in Colossus 2 include:

- Exascale AI training capability

- Ultra-high-bandwidth interconnects for seamless GPU communication

- Optimized architecture for multi-modal training (text, image, audio, video)

This makes Colossus 2 not just a compute expansion but a platform for the next generation of general-purpose AI.

Why Is xAI Expanding AI Compute?

Elon Musk’s vision goes beyond AI assistance. His long-term goal is to create Artificial General Intelligence (AGI), a system capable of human-like reasoning, learning, and adaptability. In Musk’s own words:

“To reach AGI, you need a lot of data, a lot of models, and a lot of compute. xAI expands AI compute to make AGI possible, not just probable.”

Here are the primary motivations behind the massive expansion:

1. Training Larger Grok Models: Future versions like Grok 5 and Grok 6 are expected to have trillions of parameters. Such models require vast compute power.

2. Competitive Pressure: OpenAI, Meta, and Google are all building massive clusters. xAI must scale to stay competitive.

3. Real-Time AI for X: By integrating Grok directly into X, Musk wants a model that updates in real time, something only possible with continuous compute availability.

4. Autonomous Systems: Compute isn’t just for chatbots. xAI’s models could power robotics, autonomous vehicles, and space navigation systems for companies like Tesla and SpaceX.

Nvidia’s Critical Role

At the heart of this AI compute revolution is Nvidia. The GPU manufacturer has become the backbone of AI infrastructure worldwide, and xAI’s reliance on H100 Tensor Core GPUs confirms that.

The H100, one of Nvidia’s most powerful AI GPUs, is built for Transformer-based models, making it well suited to Grok’s architecture.

Its capabilities in FP8 precision training, multi-GPU scalability, and tensor parallelism are key reasons xAI chose it.

As xAI expands AI compute, it is also creating unprecedented demand for Nvidia’s top-tier GPUs, possibly affecting global supply chains and influencing the GPU market’s economics.

Aiming for 50 Million H100 Equivalent Units

The ultimate objective is staggering: 50 million H100-equivalent compute units by 2030. For perspective, that’s more than 10 times the current AI infrastructure of OpenAI and Google combined.
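To put that target in perspective, the arithmetic below is a rough sketch of the yearly growth it implies. It assumes a starting point of 700,000 units (the 2025 deployment figure cited in this article, treating each GPU as one H100 equivalent) and a five-year horizon. Both simplifications, and the function name `implied_annual_growth`, are illustrative assumptions rather than anything xAI has published.

```python
def implied_annual_growth(start_units: float, target_units: float, years: int) -> float:
    """Return the constant yearly growth factor that turns start_units into target_units."""
    if start_units <= 0 or target_units <= 0 or years <= 0:
        raise ValueError("all inputs must be positive")
    # Compound growth: target = start * factor ** years, solved for factor.
    return (target_units / start_units) ** (1 / years)


# Illustrative assumptions, not official xAI figures.
START_UNITS = 700_000       # assumed: one deployed GPU counts as one H100 equivalent
TARGET_UNITS = 50_000_000   # the 50 million H100-equivalent goal
YEARS = 5                   # assumed horizon: "within the next five years"

growth = implied_annual_growth(START_UNITS, TARGET_UNITS, YEARS)
print(f"Required growth factor per year: {growth:.2f}x")
```

Under these assumptions the fleet would need to more than double every year, which gives a sense of how aggressive the target is.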

Potential Implications:

- A new AI arms race: Governments may begin responding to this massive private compute buildup.

- Data sovereignty concerns: If compute is centralized in a few hands, it raises ethical and regulatory questions.

- AGI readiness: With such compute, Grok could move from a chatbot to a sentient AI agent in various domains.

The scale of ambition suggests that the phrase “xAI expands AI compute” is more than a tech update: it is a roadmap to AI supremacy.

What Is Grok?

Grok is xAI’s proprietary conversational AI model, currently in its fourth generation (Grok 4). Initially launched as a competitor to ChatGPT, it is integrated directly into the X platform, offering users contextual, real-time replies, news synthesis, and intelligent search.

Key Features of Grok 4

- Contextual Learning: Grok 4 can maintain long conversations with better memory integration.

- Real-Time Learning: Unlike ChatGPT, Grok pulls data from live posts and user interactions on X.

- Multi-modal Capabilities: Supports image recognition and summarization, and can interpret memes, videos, and charts.

- Open-Ended Personality: Built to offer a humorous, sarcastic, or politically incorrect tone if asked.

Grok 4 also marks xAI’s foray into deep personalization, learning from user preferences and adapting responses based on social behavior.

Introducing Baby Grok

In tandem with Grok 4, xAI has been experimenting with a lightweight version: Baby Grok.

What is Baby Grok?

Baby Grok is a smaller language model designed for:

- Mobile and Edge Devices

- Low-power environments

- IoT-based smart assistants

Though it’s not as powerful as Grok 4, Baby Grok has been optimized for:

- Low-latency interaction

- Minimal-compute inference

- On-device learning

This model may power future Tesla vehicles, SpaceX spacecraft, or embedded systems in Musk’s wider tech ecosystem.

Competitive Landscape

As xAI expands AI compute, rival companies are watching closely. Here’s how others compare:

| Company | AI Model | GPUs Deployed | Peak Compute Goal |
|---|---|---|---|
| xAI | Grok | 700K+ (by 2025) | 50M H100 Eq. |
| OpenAI | GPT-4.5 & 5 | 250K | 10M+ by 2026 |
| Google DeepMind | Gemini 2.5 | 200K | 15M by 2027 |
| Meta | LLaMA 3 | 300K | 25M by 2030 |
| Anthropic | Claude 3.5 | 150K | 10M+ by 2026 |

The scale at which xAI expands AI compute suggests that Elon Musk is betting not just on competitiveness but on dominance.
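As a rough illustration, the goal-to-deployed multiples implied by the table can be computed directly. The sketch assumes the figures are comparable across companies, treats "700K+" and "10M+" as lower bounds, and counts one deployed GPU as one compute unit. None of these assumptions is confirmed by the companies involved, and the helper name `scale_up_multiple` is hypothetical.

```python
# (company, GPUs deployed, peak compute goal), copied from the comparison table.
# "700K+" and "10M+" are treated as their lower bounds (an assumption).
ROWS = [
    ("xAI", 700_000, 50_000_000),
    ("OpenAI", 250_000, 10_000_000),
    ("Google DeepMind", 200_000, 15_000_000),
    ("Meta", 300_000, 25_000_000),
    ("Anthropic", 150_000, 10_000_000),
]


def scale_up_multiple(deployed: int, goal: int) -> float:
    """Return how many times larger the stated goal is than the deployed fleet."""
    return goal / deployed


multiples = {name: scale_up_multiple(deployed, goal) for name, deployed, goal in ROWS}

# Print companies from the largest implied scale-up to the smallest.
for name, multiple in sorted(multiples.items(), key=lambda item: item[1], reverse=True):
    print(f"{name:<16} {multiple:5.1f}x")
```

Because the deployed counts and the goals are not stated in the same units or for the same target years, these ratios are best read as an order-of-magnitude comparison only.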

Conclusion

The announcement that xAI is expanding its AI compute with 550,000 new GPUs marks a turning point in the AI industry. With ambitions to reach 50 million H100-equivalent compute units and develop increasingly capable models like Grok 4 and Baby Grok, xAI is establishing itself as a heavyweight in AI innovation.

By leveraging Elon Musk’s resources, integrating with X, and harnessing Nvidia’s best hardware, xAI is not just building chatbots; it is building the backbone of future intelligent systems. Whether this leads us to AGI or raises new ethical dilemmas, one thing is clear: the AI race has entered its next phase, and xAI is leading the charge.